openai api tutorial

prompt engineering

ai integration

chatgpt development

api implementation

OpenAI API Tutorial: Master Integration & Prompt Engineering

Breaking Ground: OpenAI API Fundamentals That Matter

This section lays the groundwork for your journey with the OpenAI API. We'll cover the essentials, from setting up your developer account and getting your API keys to understanding the core differences between the various models. This knowledge is crucial for effectively building AI-powered applications.

Choosing The Right Model For The Job

Picking the right model is paramount. The OpenAI API offers a range of models, each with its own set of strengths and weaknesses. GPT-4 shines in complex reasoning and nuanced language understanding, making it a perfect fit for tasks that demand higher-level cognitive abilities. GPT-3.5 offers a robust and cost-effective solution for many common applications, such as chatbots and content generation. Specialty models like DALL-E unlock creative possibilities with AI-generated images. This variety lets developers select the model that best suits their project's unique needs.

Authentication and API Keys: Safeguarding Your Access

Protecting your OpenAI API integration is just as important as selecting the right model. Your API keys are like digital credentials, providing access to OpenAI's powerful resources. Safeguarding these keys is non-negotiable. Best practices include secure key storage, using environment variables, and regular key rotation. Think of your API key like the key to your house—you wouldn't leave it lying around, would you? These practices minimize the risk of unauthorized access and misuse. For further guidance on managing costs associated with Large Language Models (LLMs), check out this helpful resource: How to master LLM pricing.

Understanding Tokens and Completions: The Building Blocks of API Communication

The OpenAI API uses tokens, representing raw text. These can be words, word fragments, or even punctuation. Understanding how tokens are calculated is essential for managing costs and optimizing API performance. Completions are the API's responses to your input or prompt. Mastering the relationship between tokens and completions is the key to effective API interaction. For instance, a longer prompt uses more tokens, potentially increasing costs. This makes efficient prompt design crucial.

Navigating OpenAI's Pricing Structure

OpenAI's pricing is typically based on token usage. Different models have different costs per token, and grasping this structure is essential for budgeting and cost control. You can avoid unexpected bills by closely monitoring token consumption and selecting the right model for the job. This planning allows developers to balance performance needs with budget constraints. OpenAI's rapid growth in the AI industry underscores the high demand for these services. OpenAI has achieved impressive growth, boasting over 100 million ChatGPT users by January 2023. The company's projected $11.6 billion revenue by 2025 speaks volumes about its impact. For a deeper dive into OpenAI's statistics, visit: OpenAI Statistics.

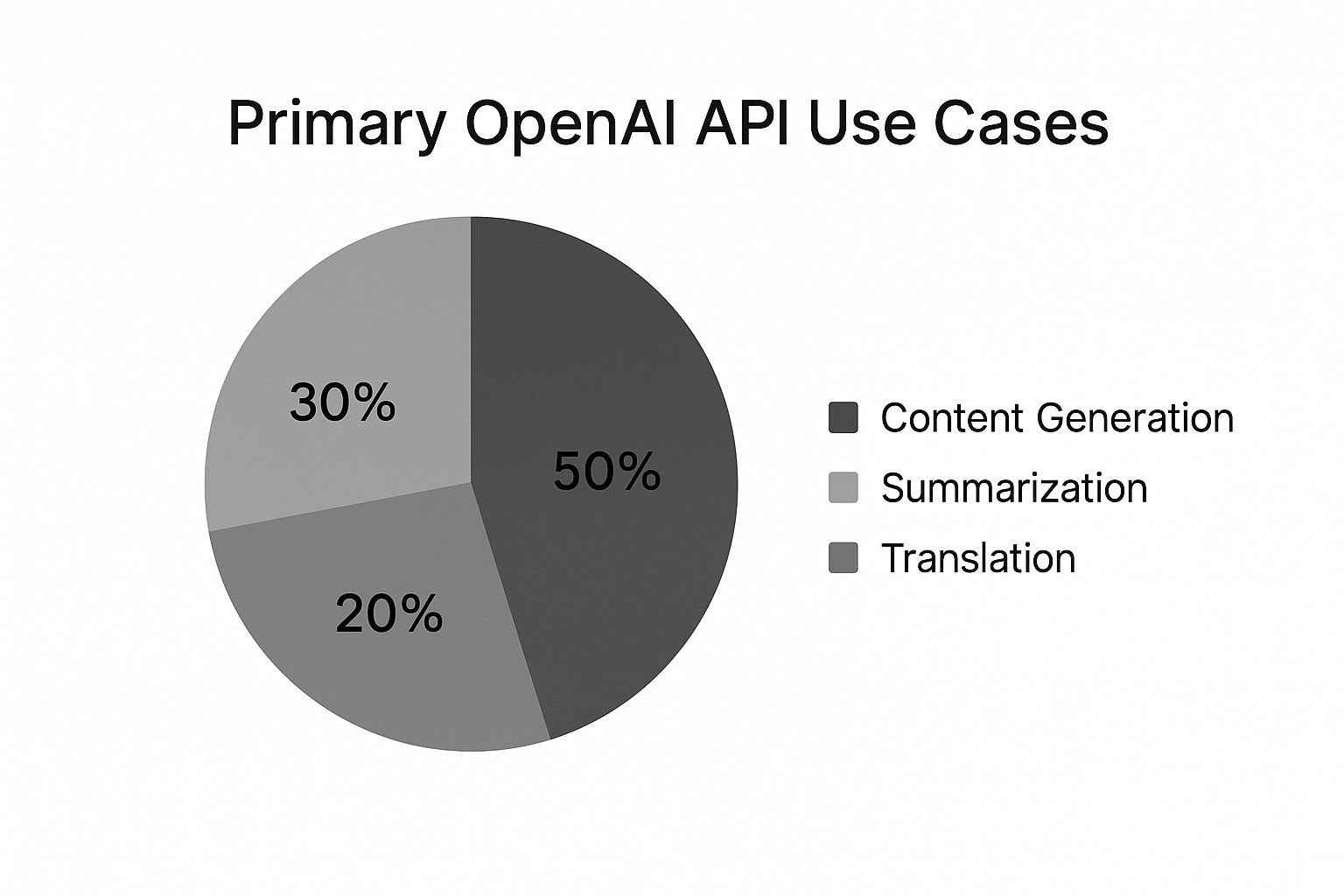

The following data chart visualizes key characteristics of several OpenAI models, comparing their token limits, relative costs, and performance.

As the chart illustrates, GPT-4 can handle considerably longer inputs than GPT-3.5, demonstrating significant differences in their token limits. The chart also reveals variations in relative cost and performance across the models, offering a snapshot of their strengths and trade-offs. This visualization emphasizes the importance of choosing the right model based on specific project requirements and budget.

To further clarify the differences between models, let's look at a detailed comparison:

OpenAI Model Comparison: This table compares features, capabilities, and pricing of different OpenAI models available through the API.

| Model | Token Limit | Use Cases | Relative Cost | Performance |

|---|---|---|---|---|

| GPT-3.5-turbo | 4,096 tokens | Chatbots, content generation, Q&A | Lower | Fast, good for general tasks |

| GPT-4 | 8,192 or 32,768 tokens | Complex reasoning, code generation, advanced content creation | Higher | Significantly better reasoning and performance than GPT-3.5 |

| DALL-E | N/A | Image generation from text prompts | Varies by image size | High-quality image generation |

This table summarizes key distinctions between popular OpenAI models, including token limits, typical use cases, relative costs, and overall performance characteristics. Choosing the appropriate model depends on the specific requirements of your project.

Your First OpenAI API Integration: From Code to Reality

This section bridges the gap between theory and practice, transforming abstract concepts into functional code that you can use immediately. We'll explore practical integrations using Python, JavaScript, and cURL, focusing on examples that actually work. This hands-on approach will give you the skills to begin building your own AI-powered applications. When integrating the OpenAI API, reviewing official documentation can be helpful. You might find useful information in Notaku's Docs.

Setting Up Your Development Environment

Before writing any code, ensure your environment is properly configured. This means installing the necessary libraries and setting up your API key.

- Python: Install the OpenAI Python library using

pip install openai. - JavaScript: Use a package manager like npm or yarn to install the OpenAI Node.js library.

- cURL: No specific library installations are needed for cURL, since it's a command-line tool. You will, however, need your API key readily available.

Making Your First API Call

Let's begin with a simple text generation example using the /completions endpoint. This endpoint is essential for a variety of applications, from generating creative text formats to answering questions.

To help you get started quickly, the following table summarizes the setup steps for different programming languages:

Language-Specific Setup Requirements

| Programming Language | Required Libraries | Setup Steps | Example Code |

|---|---|---|---|

| Python | openai | pip install openai | import openai openai.api_key = "YOUR_API_KEY" |

| JavaScript | openai | npm install openai or yarn add openai | const OpenAI = require('openai'); const openai = new OpenAI({apiKey: 'YOUR_API_KEY'}); |

| cURL | None | N/A | curl https://api.openai.com/v1/completions ... |

This table provides a quick reference for the necessary libraries and setup steps for each language. The example code snippets show how to initialize the OpenAI library and set your API key. Remember to replace "YOUR_API_KEY" with your actual API key.

- Python:

import openai

openai.api_key = "YOUR_API_KEY"

response = openai.Completion.create(

model="text-davinci-003", # Or a suitable model

prompt="Write a short story about a robot learning to love.",

max_tokens=100

)

print(response.choices[0].text)- JavaScript:

const OpenAI = require("openai");

const openai = new OpenAI({ apiKey: "YOUR_API_KEY" });

async function generateText() {

const completion = await openai.completions.create({

model: "text-davinci-003",

prompt: "Write a short story about a robot learning to love.",

max_tokens: 100,

});

console.log(completion.choices[0].text);

}

generateText();- cURL:

curl https://api.openai.com/v1/completions \

-H "Content-Type: application/json" \

-H "Authorization: Bearer YOUR_API_KEY" \

-d '{

"model": "text-davinci-003",

"prompt": "Write a short story about a robot learning to love.",

"max_tokens": 100

}'These examples demonstrate how to perform a simple text generation task in different programming languages.

Handling Responses and Errors

The OpenAI API returns responses in JSON format. Understanding this format and how to parse it is important for accessing the generated text, usage information (like token counts), and other relevant data. Effective error handling is also essential. Network issues, incorrect API keys, or exceeding rate limits can all lead to errors. Implement appropriate error handling to ensure your application's resilience.

Structuring Requests for Reliability

Experienced developers structure their requests with reliability in mind. This includes strategies like setting timeouts to avoid indefinite waiting and implementing retry mechanisms to handle transient network problems. This proactive approach ensures your application gracefully handles unexpected interruptions. For example, if your application makes multiple API calls in sequence, a robust retry mechanism could be the difference between a minor issue and total application failure.

This image shows how different code components interact within a typical OpenAI API integration. Notice how user input flows through the application logic, interacts with the OpenAI API, and provides a response to the user. Understanding this flow helps you identify potential points of failure and create a more robust application.

Prompt Engineering: The Art Behind AI Excellence

Building on the foundation of API integration, let's explore prompt engineering. This crucial skill unlocks the true power of the OpenAI API. It's the art of carefully crafting input prompts to guide the AI toward the results you want. Mastering this often-overlooked skill significantly impacts the quality and relevance of AI-generated content.

The Power of Precise Prompts

Imagine an AI model as a highly skilled artist. Your prompt acts as the creative brief. A vague or poorly worded brief leads to unpredictable output. A clear, detailed brief, on the other hand, guides the artist towards your vision. Similarly, even small changes in your prompt can dramatically change the AI's response.

For instance, asking for a "story" will produce very different results compared to asking for a "story about a robot learning to love." The more specific your prompt, the more tailored and effective the AI's output. Precision is key.

Advanced Prompting Techniques

Several advanced techniques can further enhance your prompt engineering. Few-shot learning, for example, involves including a few examples of the desired output within the prompt itself. This helps the AI grasp the pattern and generate similar content.

Another technique, chain-of-thought prompting, guides the AI through a step-by-step reasoning process. This is particularly helpful for complex tasks that demand logical thinking. These techniques help unlock the full potential of OpenAI models, leading to more precise and tailored content. For more advanced techniques, explore our guide on How to master prompt generation.

Addressing Hallucinations and Prompt Injection

AI models can sometimes produce inaccurate or nonsensical information, often referred to as hallucinations. Additionally, malicious users might attempt prompt injection, carefully crafting inputs to manipulate the AI's behavior.

Implementing guardrails, such as input validation and output filtering, helps prevent these issues. These measures ensure the AI's responses are both relevant and safe. Think of these safety measures as the boundaries you set within the creative brief, keeping the artist on track.

Maintaining Consistent AI Personalities

Maintaining a consistent AI persona is especially important for applications like chatbots. Careful prompt crafting can guide the AI towards a specific style, tone, and language. This consistency creates a more engaging and believable user experience.

For example, a chatbot for a medical company needs to maintain a professional and informative persona. This builds user trust. Consistency contributes to a more human-like interaction, fostering a better user experience.

Adapting Your Approach

Different models and use cases require different prompting strategies. A prompt that works well with GPT-3.5 might not be optimal for GPT-4 or DALL-E.

Tailor your prompts to the specific model's capabilities and the requirements of your application. This adaptable approach ensures efficient resource use and optimal performance. Just as an artist uses different techniques depending on the medium, you'll need to adjust your prompting approach based on the specific AI model. This leads to more effective and tailored results.

Building Conversational AI That People Actually Use

Moving beyond the technical intricacies of the OpenAI API, crafting engaging conversational AI experiences becomes an art. It's more than just connecting API calls; it demands understanding user interaction and strategic design. This is where theoretical knowledge transforms into practical application, creating chatbots and conversational agents people genuinely enjoy.

Designing Engaging Conversational Flows

Building a chatbot isn't simply about conveying information; it's about creating a natural, engaging dialogue. Imagine a conversation with a friend. It should flow smoothly, remain contextually relevant, and offer value. This necessitates careful planning of conversational flow, where each interaction builds upon the last. For instance, a chatbot for an online store shouldn't just ask, "What are you looking for?" It should offer personalized suggestions, remember past preferences, and guide the user towards their desired product.

Mastering Multi-Turn Conversations

Many basic chatbots fail after the first exchange because they can't handle multi-turn conversations. True conversational AI remembers past interactions and uses that context to inform future responses. Imagine asking a friend a question, only for them to forget your previous statement moments later – frustrating, right? Contextual memory is crucial for effective conversational agents, allowing the AI to maintain a coherent and human-like interaction across multiple turns.

Memory Management and Token Usage

Remembering context is crucial, but managing that memory efficiently is equally vital. Storing every interaction can quickly inflate token usage and increase costs. This is where strategic memory techniques become essential. Developers can employ methods like summarizing past exchanges or selectively storing key data, maintaining context without excessive token overhead. Think of it like note-taking – you jot down the important points, not every single word. This optimized approach balances conversation history with efficient token management.

Handling Unexpected Input: Fallback Strategies

Even the most well-designed conversational AI will encounter unexpected user input, from typos to off-topic questions. Robust fallback strategies are crucial for a positive user experience. These strategies could involve asking clarifying questions, offering helpful suggestions, or gracefully admitting a lack of understanding. A well-crafted fallback can transform a potentially frustrating interaction into a helpful one.

Content Filtering and Responsible AI

Open conversations are engaging, but responsible AI practices are paramount. Implement effective content filtering to prevent the AI from generating harmful or inappropriate responses, safeguarding your users and protecting your application's reputation. Balancing open conversation with responsible content filtering creates a positive and safe user environment.

This image illustrates a typical conversation flow in a well-designed chatbot. The user's questions are answered clearly and concisely, with the chatbot proactively offering helpful information and suggestions. This approach promotes user engagement and satisfaction. By combining these techniques – engaging conversation flows, multi-turn discussions, efficient memory strategies, fallback mechanisms, and content filtering – you can build conversational AI that not only functions correctly but also keeps users returning. This creates a richer, more valuable user experience. For further strategies on improving prompt effectiveness, see our guide on Prompt improvement.

Advanced Techniques That Transform Good APIs Into Great Ones

Building upon the fundamentals of the OpenAI API, this section explores advanced strategies to significantly elevate your API implementations. These techniques are the secrets seasoned developers use to create truly exceptional AI-powered applications.

Fine-Tuning for Specialized Tasks

While pre-trained models like GPT-3.5 and GPT-4 offer impressive versatility, fine-tuning allows you to customize these models for specific tasks. This focused training can dramatically improve performance, particularly for niche applications or specialized language domains.

For example, imagine building a medical diagnosis assistant. Fine-tuning a model on medical texts can significantly improve its accuracy and relevance. Furthermore, fine-tuning often reduces token consumption, lowering operational costs. This means a more specialized model can be more cost-effective in the long run.

Caching Strategies to Optimize Performance

Frequent calls to the OpenAI API can accumulate costs and increase latency. Strategically implementing caching mechanisms stores API responses for frequently used prompts. This dramatically reduces response times and minimizes unnecessary API calls.

Think of it like saving a frequently visited website to your browser bookmarks. You access it instantly without needing to search again. Caching allows your application to quickly retrieve previously generated content, improving user experience and reducing costs.

Token Optimization: Doing More With Less

Tokens represent the raw text processed by the OpenAI API. Optimizing token usage not only lowers costs but also often improves performance. Techniques like prompt simplification, removing redundant words, and using shorter prompts can deliver surprisingly better results with fewer resources.

This efficient approach maximizes the value of every API call. For further improvement tips, you might find this helpful: How to master prompt improvement.

Combining API Calls for Complex Problem Solving

Single API calls are powerful, but combining multiple calls unlocks even greater potential. Think of it like assembling building blocks. Each individual block has a specific function, but combined, they can create a complex structure.

Similarly, chaining different API calls creates sophisticated AI workflows that solve problems beyond the scope of a single prompt. For example, one API call could summarize a large text, while a second call translates the summary into another language. This allows you to tackle complex tasks by breaking them down into smaller, manageable components.

Performance Optimization and Error Handling

Latency can significantly impact user experience. Implementing performance optimization techniques, such as asynchronous API calls and minimizing blocking operations, can reduce latency. In some cases, these techniques can achieve reductions of up to 70%.

Robust error handling is also crucial. Network issues, rate limiting, and unexpected input can all lead to errors. Implementing effective error handling ensures your application gracefully handles these unpredictable events, providing a seamless user experience even when problems arise. This proactive approach builds resilience into your applications.

Real-World Success Stories: OpenAI API in Action

This section explores how organizations are using the OpenAI API to tackle real-world business problems. Through practical case studies, we'll examine implementations across various sectors, providing valuable insights and inspiration for your own OpenAI API projects. This is where the practical application of OpenAI API tutorial concepts truly comes to life.

Scaling Content Creation While Maintaining Quality

Many content platforms struggle to scale content production while maintaining high quality. The OpenAI API offers a compelling solution. One platform used GPT-3 to generate initial article drafts, freeing up their writers to focus on editing and refining the content. This resulted in a 30% increase in content output while upholding quality standards.

This achievement highlights the power of the OpenAI API for content creation. Overcoming the initial challenge of training the model to adhere to their specific style guidelines demonstrated the API's adaptability.

Enhancing Customer Support With AI Assistance

Customer support often involves answering repetitive questions, a task easily automated. A customer service company integrated the OpenAI API to provide instant responses to common inquiries.

This freed up human agents to handle more complex issues, leading to improved customer satisfaction and a 15% reduction in support costs. Integrating the API required careful prompt design to ensure accurate and helpful AI responses, highlighting the importance of prompt engineering.

The team successfully overcame the challenge of integrating the AI with their existing CRM system by developing a custom integration solution.

Personalizing User Experiences Through AI-Powered Recommendations

Personalized recommendations are crucial for many online platforms. An e-commerce company used the OpenAI API to generate personalized product descriptions based on individual user preferences.

This resulted in a 10% increase in sales conversion rates, demonstrating the effectiveness of personalized, AI-generated content. A key challenge was ensuring data privacy and security, which they addressed by implementing secure data handling practices. The ability to dynamically adjust the AI's output based on changing user behavior proved vital to their success.

Streamlining Operations Through Automated Data Analysis

Data analysis is fundamental for informed decision-making. A financial institution used the OpenAI API to automate the analysis of financial reports.

This allowed their analysts to focus on strategic interpretations, resulting in a 20% increase in efficiency. The initial hurdle was integrating the API with their existing data infrastructure, overcome through careful planning and a phased rollout. This success highlights how the OpenAI API can augment human expertise.

Lessons Learned and Key Takeaways

These case studies offer valuable lessons for successful OpenAI API implementation:

- Strategic Planning: Defining clear objectives and a practical integration strategy is critical.

- Prompt Engineering: Mastering prompt engineering is key to guiding the AI towards desired outcomes.

- Cost Control: Careful management of token usage and appropriate model selection optimize costs.

- Integration With Existing Systems: Integration requires careful planning and potential custom development.

- Continuous Optimization: Regular evaluation and fine-tuning of AI models ensures optimal performance.

These real-world examples provide practical insights into successful OpenAI API applications across diverse industries. These stories serve as both inspiration and a practical guide, sharing valuable lessons learned from those who have navigated the journey of OpenAI API development. Ready to transform your ideas into reality and launch your own AI-powered applications? AnotherWrapper provides the tools and resources to help you get started quickly. With customizable demo applications, seamless integrations, and essential services, AnotherWrapper simplifies the development process, allowing you to focus on innovation. Visit AnotherWrapper today and begin building the future of AI.

Fekri